The Spark cluster mode overview explains the key concepts in running on a cluster.

#SPARK FOR MAC MANUAL HOW TO#

Running Spark Client Applications Anywhere with Spark Connect

Spark Connect is a new client-server architecture introduced in Spark 3.4 that decouples Spark client applications and allows remote connectivity to Spark clusters. The separation between client and server allows Spark and its open ecosystem to be leveraged from anywhere. In Spark 3.4, Spark Connect provides DataFrame API coverage for PySpark. To learn more about Spark Connect and how to use it, see Spark Connect Overview.

Spark comes with several sample programs, with examples in Python, Scala, Java, and R. Spark can also be run interactively in a Python interpreter. The R example, for instance, is submitted with bin/spark-submit examples/src/main/r/dataframe.R.

#SPARK FOR MAC MANUAL WINDOWS#

Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS), and it should run on any platform that runs a supported version of Java. This should include JVMs on x86_64 and ARM64. It’s easy to run locally on one machine: all you need is to have java installed on your system PATH, or the JAVA_HOME environment variable pointing to a Java installation.

Python 3.7 support is deprecated as of Spark 3.4.0. Support for Java 8 versions prior to 8u362 is also deprecated as of Spark 3.4.0. When using the Scala API, applications must use the same version of Scala that Spark was compiled for. For example, when using Scala 2.13, use Spark compiled for 2.13, and compile code/applications for Scala 2.13 as well.

For Java 11, setting =true is required for the Apache Arrow library. This prevents the : or .(long, int) not available error when Apache Arrow uses Netty internally.
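Running Spark locally needs either java on the system PATH or a JAVA_HOME variable pointing to a Java installation. The check can be sketched in Python roughly as follows. The helper name `find_java` and the choice to look at JAVA_HOME before PATH are assumptions of this sketch, not something the text above prescribes.

```python
import os
import shutil


def find_java(env=None, which=shutil.which):
    """Return the path to a java executable, or None if none is found.

    Hypothetical helper: looks under JAVA_HOME first, then searches PATH.
    """
    env = os.environ if env is None else env
    java_home = env.get("JAVA_HOME")
    if java_home:
        # The executable is java.exe on Windows and java elsewhere.
        exe = "java.exe" if os.name == "nt" else "java"
        candidate = os.path.join(java_home, "bin", exe)
        if os.path.isfile(candidate):
            return candidate
    # Fall back to whatever "java" resolves to on PATH (may be None).
    return which("java")


if __name__ == "__main__":
    path = find_java()
    if path:
        print(f"java found at: {path}")
    else:
        print("java not found; install Java or set JAVA_HOME")
```

The `env` and `which` parameters exist only so the lookup can be exercised without touching the real environment.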

#SPARK FOR MAC MANUAL INSTALL#

Scala and Java users can include Spark in their projects using its Maven coordinates and Python users can install Spark from PyPI.
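For Python users, the PyPI package is named `pyspark`. A small sketch for checking whether it is already installed, using only the standard library (Python 3.8 or later); the helper name `installed_version` is made up for this example.

```python
from importlib import metadata


def installed_version(package="pyspark"):
    """Return the installed version string of a package, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("pyspark is not installed; try: pip install pyspark")
    else:
        print(f"pyspark {version} is installed")
```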

#SPARK FOR MAC MANUAL DOWNLOAD#

Apache Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python, and R, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools including Spark SQL for SQL and structured data processing, pandas API on Spark for pandas workloads, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for incremental computation and stream processing.

Get Spark from the downloads page of the project website. This documentation is for Spark version 3.4.0. Spark uses Hadoop’s client libraries for HDFS and YARN. Downloads are pre-packaged for a handful of popular Hadoop versions. Users can also download a “Hadoop free” binary and run Spark with any Hadoop version.

Now open the Spark Web UI in your favorite web browser to monitor your jobs.

In this article, you have learned the step-by-step installation of the latest version of Apache Spark using Homebrew. The steps include installing Homebrew, Java, Scala, and Apache Spark, and validating the installation by running spark-shell. Now that you have successfully installed the latest version of Apache Spark, you can learn more about the Spark framework through further articles.

Enter the following commands in the Spark Shell in the same order. For more examples on Apache Spark, refer to PySpark Tutorial with Examples.

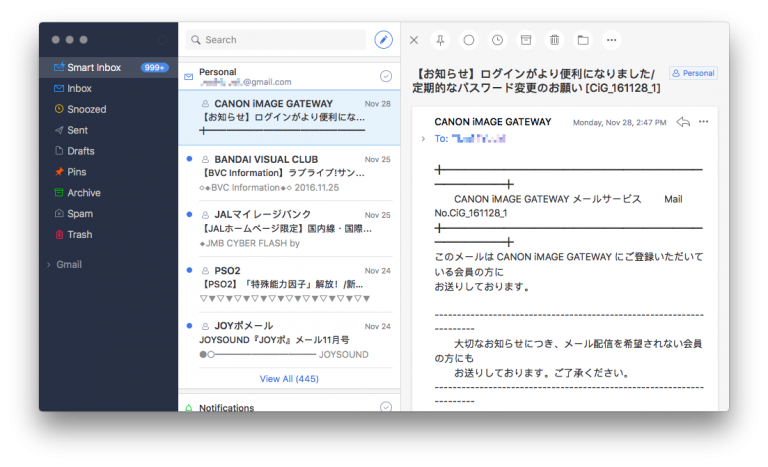

After successfully installing the latest version of Apache Spark, run spark-shell from the command line to launch the Spark shell. spark-shell is a CLI utility that comes with the Apache Spark distribution. You should see something like the image above (ignore the warning for now). Note that it displays the Spark version and Java version you are using on the terminal. Let’s create a Spark DataFrame with some sample data to validate the installation.
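The article runs this check in spark-shell, which is a Scala shell. As a rough equivalent, the same idea can be sketched with PySpark, assuming the `pyspark` package is installed. The sample rows, column names, and the function name `validate_installation` below are illustrative and not taken from the article.

```python
# Sample data for the check; the names and values are illustrative only.
COLUMNS = ["language", "users_count"]
ROWS = [("Java", 20000), ("Python", 100000), ("Scala", 3000)]


def validate_installation():
    """Build a small DataFrame on a local Spark session and return its row count."""
    # Imported here so this module still loads where pyspark is not installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[1]")          # run in-process with a single worker thread
        .appName("install-check")
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame(ROWS, COLUMNS)
        df.show()                    # prints the rows as a text table
        return df.count()
    finally:
        spark.stop()
```

Calling `validate_installation()` should print the three rows and return the row count; an import error or a Java-related exception at this point suggests the installation is incomplete.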
